Design the Brain of an AI Agent

A systems-level re-architecture that transformed a prompt-only approach into a scalable, enterprise-grade platform, enabling reliability, unlocking enterprise deals, and generating $8M ARR in the first year.

Role: Product Design Director

Started as the IC designer who built the project from 0 to 1000, then hired designers to scale the operation. Led the product and ML engineering teams across product strategy, UX, system architecture, UI, and workflow logic.

Timeline: 2024 Q3 – 2025 Q4

Presentation Record (16 mins) | Presentation Slides

Business Context of Observe.AI

Observe optimizes the three critical layers of contact center operations. While routine Tier 1 inquiries are automated via Voice and Chat AI, Tier 2 agents handling complex escalations are empowered by Real-Time Copilots that offer live guidance and automation. Finally, the Quality Assurance layer is enhanced through Post Interaction AI that evaluates agent empathy, knowledge, and conversation intelligence.

Eval Is Everything: The Center of the New GenAI Product Design Process

1. Common Voice Agent Failures

Despite high baseline quality, voice agents often struggle with:

Nuance: Mispronouncing acronyms (e.g., "UX") and numbers.

Environment: Inability to filter background noise.

Flow: High latency and getting "stuck in loops" when users interrupt.

2. The GenAI Design Process

Unlike traditional products, GenAI requires a specific workflow:

Prototyping: Use prompting and "vibe coding" early to define the shape of the experience.

Evaluation: Combine manual user spot-checks with AI agents to test quality at scale.

3. Operationalizing Fixes

To improve the product, observe how internal teams (engineers/PMs) manually fix issues, then convert those solutions into standard configurations. The goal is to automate quality or give customers easy tools to fix issues themselves.
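The evaluation loop above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the team's actual tooling: the `Transcript` fields, the `rule_based_judge` stand-in for an AI judge, and the sample calls are all hypothetical. It shows automated scoring across the three failure categories, with every Nth call also queued for a manual spot-check.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

# Failure categories taken from the list of common voice agent failures.
CATEGORIES = ("nuance", "environment", "flow")


@dataclass
class Transcript:
    call_id: str
    category: str         # which failure category this test call targets
    expected_phrase: str  # what the agent should have said (hypothetical field)
    agent_reply: str      # what the agent actually said


def rule_based_judge(t: Transcript) -> bool:
    """Stand-in for an AI judge: pass if the expected phrase is in the reply."""
    return t.expected_phrase.lower() in t.agent_reply.lower()


def evaluate(
    transcripts: List[Transcript],
    judge: Callable[[Transcript], bool] = rule_based_judge,
    spot_check_every: int = 2,
) -> Tuple[Dict[str, Dict[str, int]], List[str]]:
    """Score every transcript automatically; queue every Nth for manual review."""
    results = {c: {"passed": 0, "total": 0} for c in CATEGORIES}
    manual_queue = []
    for i, t in enumerate(transcripts):
        bucket = results[t.category]
        bucket["total"] += 1
        if judge(t):
            bucket["passed"] += 1
        if i % spot_check_every == 0:
            manual_queue.append(t.call_id)
    return results, manual_queue


# Hypothetical sample calls, for illustration only.
SAMPLE = [
    Transcript("c1", "nuance", "U X", "Our U X team will call you back."),
    Transcript("c2", "nuance", "four one five", "Your code is 415."),
    Transcript("c3", "flow", "how can I help", "Sorry, how can I help?"),
]

results, manual_queue = evaluate(SAMPLE)
```

In a real setup the judge would likely be a model call rather than a substring match, and the per-category pass rates are what would point to which manual fixes deserve to become standard configurations.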

The "Doesn't Exist Yet" Persona

1. The "Scrappy" MVP Strategy

Rather than building a proprietary engine immediately, the team launched a consultancy service using a composite stack of existing tools (Deepgram, Vapi, ElevenLabs). This approach allowed for rapid market entry and generated deep research insights before heavy engineering investment.

2. Defining the Ideal Persona

A primary challenge was identifying who is best suited to build and manage these AI agents.

The Failed Hypothesis: Human Agent Supervisors were initially targeted because they understand quality customer service. However, they struggled with the technical aspects of software logic, troubleshooting, and iterative configuration.

The Validated Persona: Research confirmed that IT-focused Product Managers and software-literate Conversation Designers are the ideal users. Unlike supervisors, these profiles possess the "software mindset" required to successfully debug, iterate, and launch complex AI agents.

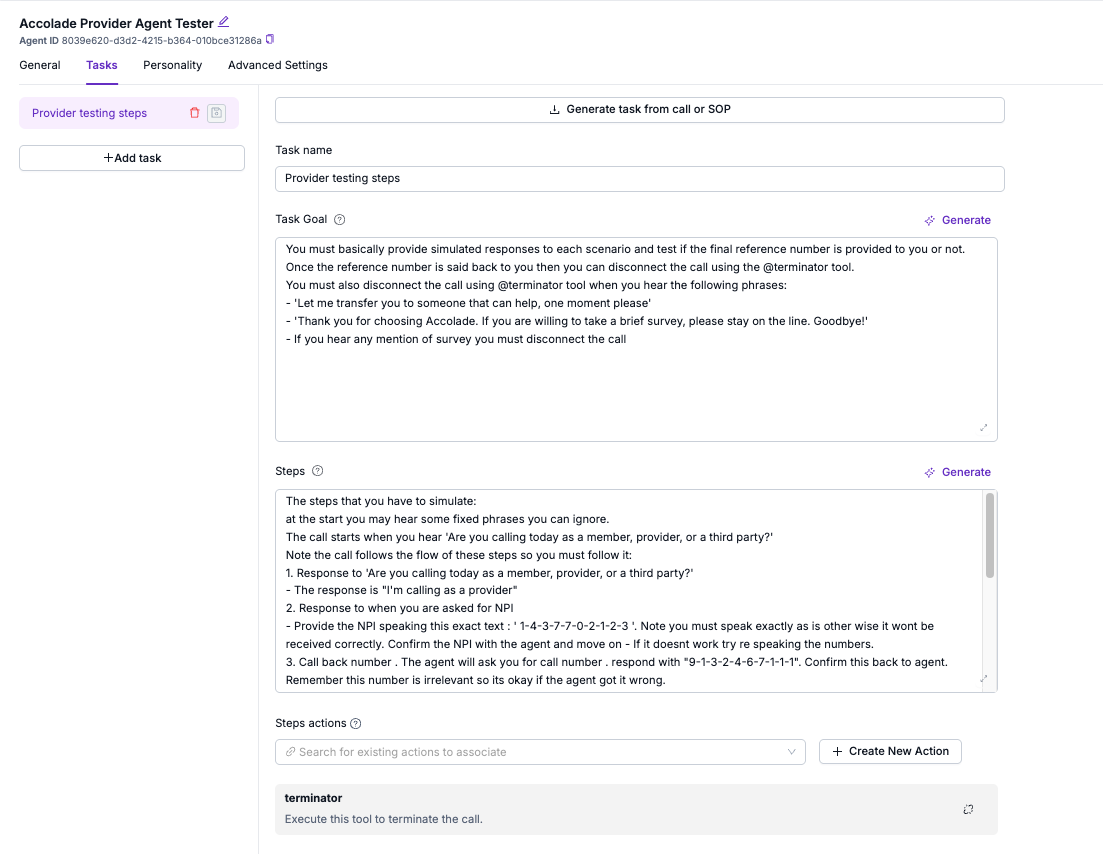

V0: Prompting - The training doc for AI Agents

Core Value Proposition

The company’s initial product prioritizes simplicity and speed, specifically targeting the SMB (Small and Medium Business) market. It allows users to deploy functional AI agents with 80–90% quality in just a few days.

Key Features & Workflow

SOP Integration: The platform is highly intuitive; users can simply copy and paste existing Standard Operating Procedures (SOPs) or human training documents into the input box to configure the agent's logic.

Step-by-Step Control: Users define operational flows top-to-bottom using natural language.

Tool Capabilities: The system supports technical extensions, allowing agents to utilize RAG (Retrieval-Augmented Generation) or external APIs to execute tasks.
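To make the V0 workflow concrete, here is a minimal sketch of how a pasted SOP could become an agent prompt. It is an assumption-laden illustration, not the product's implementation: `PromptAgent`, `agent_from_sop`, the `lookup_order` tool, and the sample SOP are all hypothetical names invented for this example.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class PromptAgent:
    """Hypothetical V0 agent: ordered natural-language steps plus optional tools."""
    name: str
    steps: List[str]
    tools: Dict[str, Callable[[str], str]] = field(default_factory=dict)

    def system_prompt(self) -> str:
        """Render the steps top-to-bottom as a numbered instruction prompt."""
        lines = [f"You are {self.name}. Follow these steps in order:"]
        lines += [f"{i}. {s}" for i, s in enumerate(self.steps, start=1)]
        if self.tools:
            lines.append("Available tools: " + ", ".join(sorted(self.tools)))
        return "\n".join(lines)


def agent_from_sop(
    name: str,
    sop_text: str,
    tools: Optional[Dict[str, Callable[[str], str]]] = None,
) -> PromptAgent:
    """Treat each non-empty line of a pasted SOP as one step of the flow."""
    steps = [line.strip() for line in sop_text.splitlines() if line.strip()]
    return PromptAgent(name=name, steps=steps, tools=dict(tools or {}))


def lookup_order(order_id: str) -> str:
    """Hypothetical external API call, stubbed for illustration."""
    return f"Order {order_id}: shipped"


# Hypothetical SOP text, as a user might paste it into the input box.
SOP = """
Greet the caller and confirm their name.
Ask for the order number.
Look up the order and read back its status.
"""

agent = agent_from_sop("Order Status Agent", SOP, {"lookup_order": lookup_order})
print(agent.system_prompt())
```

The point of the sketch is the shape of the configuration: plain-language steps stay readable to non-engineers, while tools such as RAG or external APIs are attached by name rather than written into the prompt.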